We are excited to introduce Laminar AI, a platform that helps developers, especially those working in AI and DevOps, build, monitor, and deploy reliable large language model (LLM) applications 10x faster.

What is Laminar AI?

Laminar AI is an all-in-one platform that combines orchestration, evaluations, data management, and observability in a single product. Whether you are an SRE, a DevOps engineer, or someone working on AI infrastructure, Laminar simplifies developing and scaling LLM agents with tools for faster iteration, deeper observability, and actionable analytics.

Key Features for SREs and DevOps:

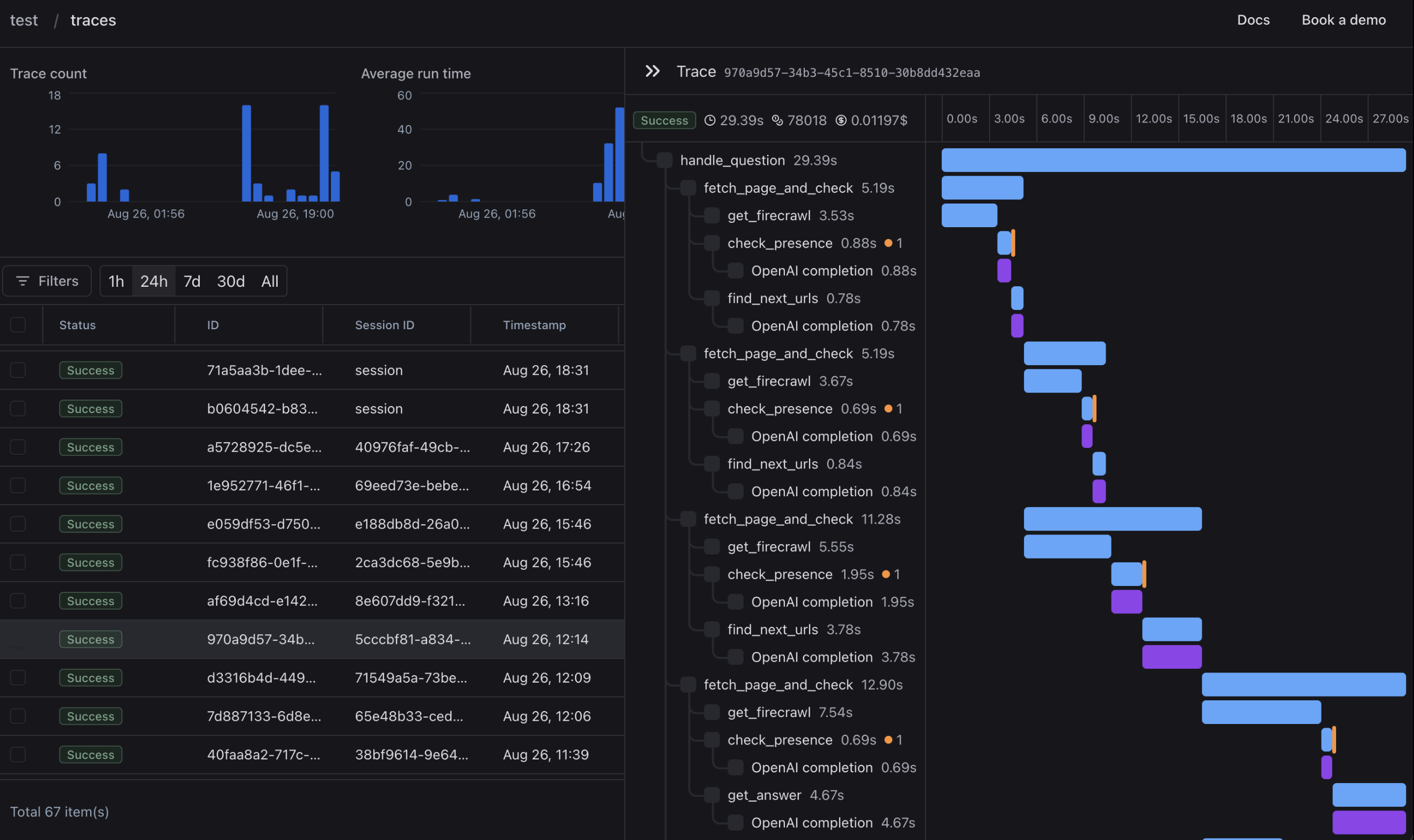

1. Observability:

Track the full execution trace of your LLM applications. Instrumentation takes only Python decorators (or function wrapping in JavaScript/TypeScript) and gives you visibility into every stage of your LLM pipelines. Laminar also integrates OpenTelemetry tracing via OpenLLMetry, so you can plug it into your existing observability stack.
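The decorator-based instrumentation described above can be sketched in plain Python to show why it produces a full trace without touching function bodies. This is not Laminar's SDK: `observe`, `SPANS`, and the pipeline functions are hypothetical names chosen for illustration, and spans are kept in an in-memory list instead of being exported through OpenTelemetry. Each decorated call records a span and its parent, so nested calls form a tree.

```python
from __future__ import annotations

import functools
import time
import uuid
from contextvars import ContextVar

# Hypothetical in-memory span store; a real setup would export spans
# through OpenTelemetry rather than keep them in a list.
SPANS = []
# Id of the span that is currently executing (None at the top level).
_current_span = ContextVar("current_span", default=None)


def observe(name: str | None = None):
    """Hypothetical decorator that records one span per function call."""
    def decorator(fn):
        span_name = name or fn.__name__

        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            span_id = uuid.uuid4().hex
            parent_id = _current_span.get()
            token = _current_span.set(span_id)
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                # Record the span even if the wrapped function raised.
                SPANS.append({
                    "id": span_id,
                    "parent": parent_id,
                    "name": span_name,
                    "duration_s": time.perf_counter() - start,
                })
                _current_span.reset(token)
        return wrapper
    return decorator


@observe()
def retrieve(query: str) -> list[str]:
    return [f"doc about {query}"]


@observe()
def call_llm(prompt: str) -> str:
    return f"answer based on: {prompt}"  # stand-in for a real model call


@observe(name="pipeline")
def answer(query: str) -> str:
    docs = retrieve(query)
    return call_llm(" ".join(docs))


answer("tracing")
for span in SPANS:
    print(span["name"], "has parent:", span["parent"] is not None)
```

Using a `ContextVar` for the current span keeps parent tracking correct across threads and async tasks, which is the same reason OpenTelemetry propagates context this way.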

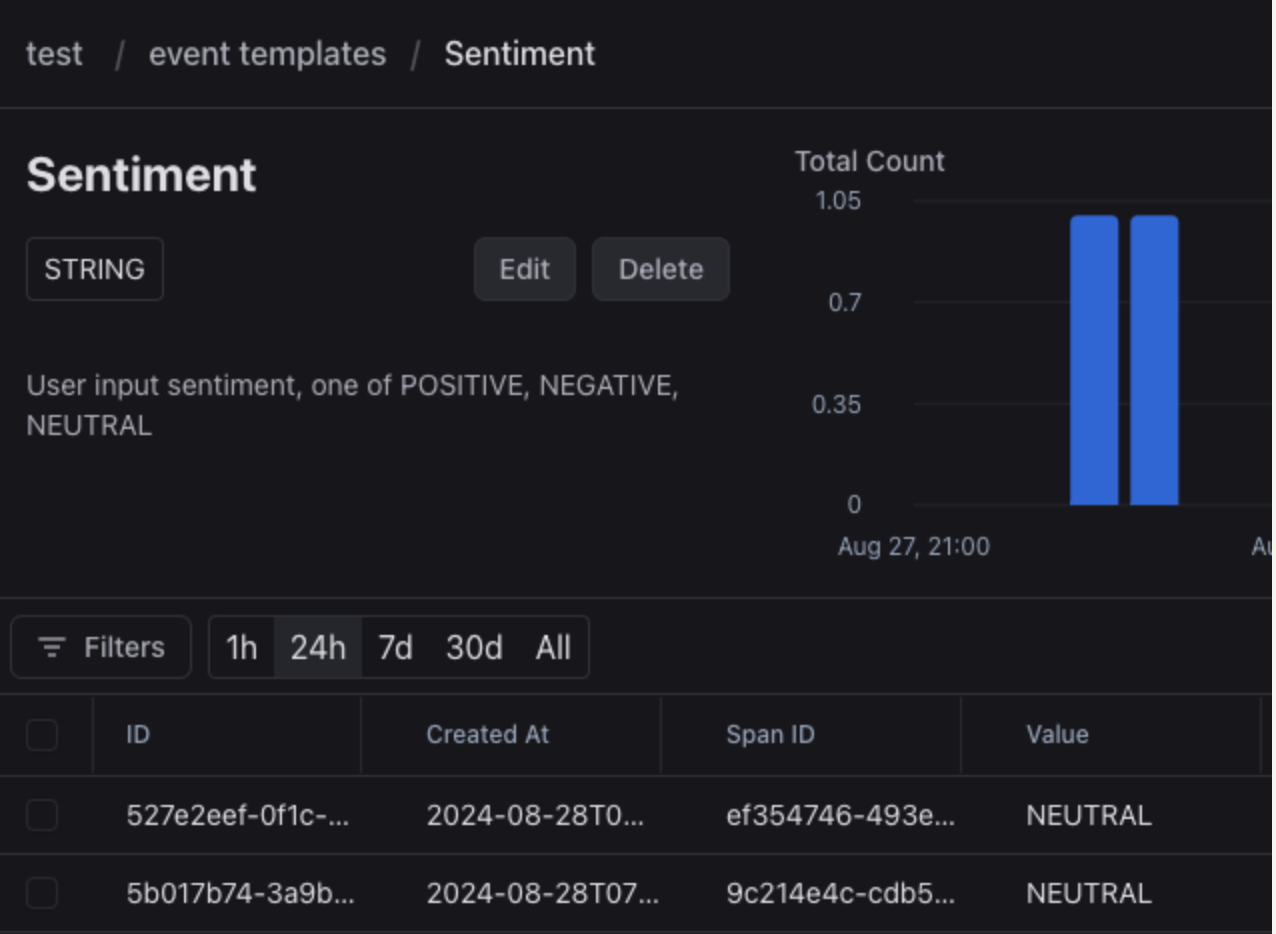

2. Analytics:

Extract actionable insights from your LLM applications by capturing semantic events such as user sentiment or behavior. Laminar converts these events into trackable metrics that you can correlate with trace data, giving you visibility into both user interactions and application performance.
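To make "events become metrics you can correlate with traces" concrete, here is a minimal sketch using made-up sample records. The record shapes and the helpers `event_counts` and `latency_by_value` are hypothetical and do not reflect Laminar's event schema; the point is that each event carries a trace id, so it can be counted on its own or joined against per-trace data such as latency.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from statistics import mean

# Made-up sample data: trace id -> end-to-end latency in seconds.
traces = {"t1": 1.2, "t2": 3.8, "t3": 0.9, "t4": 4.1}

# Made-up semantic events extracted from the same traces
# (e.g. by classifying the user's follow-up message).
events = [
    {"trace_id": "t1", "name": "user_sentiment", "value": "positive"},
    {"trace_id": "t2", "name": "user_sentiment", "value": "negative"},
    {"trace_id": "t3", "name": "user_sentiment", "value": "positive"},
    {"trace_id": "t4", "name": "user_sentiment", "value": "negative"},
]


def event_counts(events: list[dict], name: str) -> Counter:
    """Turn raw events into a simple metric: count per event value."""
    return Counter(e["value"] for e in events if e["name"] == name)


def latency_by_value(
    events: list[dict], traces: dict[str, float], name: str
) -> dict[str, float]:
    """Correlate an event with trace data: mean latency per event value."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for e in events:
        if e["name"] == name and e["trace_id"] in traces:
            grouped[e["value"]].append(traces[e["trace_id"]])
    return {value: mean(latencies) for value, latencies in grouped.items()}


print(event_counts(events, "user_sentiment"))
print(latency_by_value(events, traces, "user_sentiment"))
```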

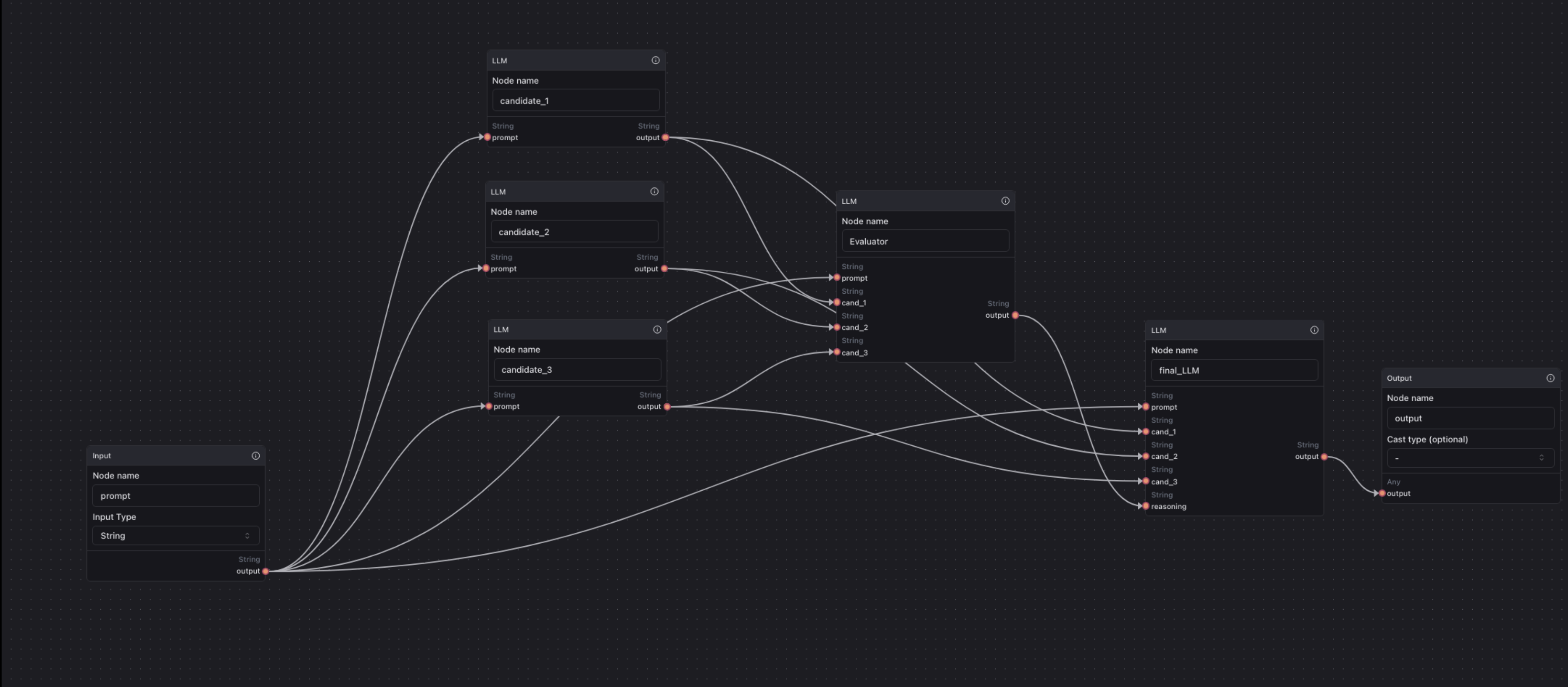

3. Prompt Chain Management:

Build and manage chains of prompts and LLMs as though they were single functions. This is especially useful for experimenting with advanced techniques such as Mixture of Agents or self-reflecting agents without hard-coding them into your codebase.
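The idea of treating a whole chain as one function can be illustrated with a Mixture of Agents shape: several proposer models draft answers and an aggregator model merges them, yet callers see a single `str -> str` function. In Laminar the chain is managed on the platform rather than written like this; the sketch only shows the abstraction, and `proposer_a`, `proposer_b`, `aggregator`, and `mixture_of_agents` are hypothetical stubs standing in for real model calls.

```python
from __future__ import annotations

from typing import Callable

# A "model" is any function from prompt text to completion text.
Model = Callable[[str], str]


# Hypothetical stubs standing in for real LLM calls.
def proposer_a(prompt: str) -> str:
    return f"A's draft for '{prompt}'"


def proposer_b(prompt: str) -> str:
    return f"B's draft for '{prompt}'"


def aggregator(prompt: str) -> str:
    return f"merged[{prompt}]"


def mixture_of_agents(proposers: list[Model], aggregate: Model) -> Model:
    """Compose several proposers and one aggregator into a single callable."""
    def chain(question: str) -> str:
        drafts = [propose(question) for propose in proposers]
        # The aggregator sees the question plus every draft in one prompt.
        combined = question + "\n" + "\n".join(drafts)
        return aggregate(combined)
    return chain


answer = mixture_of_agents([proposer_a, proposer_b], aggregator)
print(answer("What is an SLO?"))
```

Because the result has the same type as a single model, a chain can itself be used as a proposer inside another chain, which is what makes swapping techniques in and out cheap.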

4. Evaluations:

Run and analyze detailed evaluations directly in the Laminar dashboard. With the JavaScript or Python SDK, you can set up and run evaluations as part of your development pipeline.

- SRE Focus: Automated evaluations streamline testing and help ensure performance benchmarks are met while minimizing infrastructure overhead.
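An automated evaluation usually has the same parts: a dataset of inputs with expected outputs, an executor (the application under test), one or more evaluator functions, and an aggregate score checked against a benchmark. The sketch below shows that shape in plain Python. It does not claim to match the Laminar SDK's evaluation API; `dataset`, `executor`, `exact_match`, `run_evaluation`, and the 0.6 threshold are all hypothetical.

```python
from __future__ import annotations

from statistics import mean
from typing import Callable

# Hypothetical evaluation dataset: inputs with expected outputs.
dataset = [
    {"data": "2+2", "target": "4"},
    {"data": "3*3", "target": "9"},
    {"data": "10-7", "target": "3"},
]


def executor(expression: str) -> str:
    """Stand-in for the LLM application under test."""
    # Deliberately wrong on one case so the score is not trivially 1.0.
    answers = {"2+2": "4", "3*3": "9", "10-7": "4"}
    return answers[expression]


def exact_match(output: str, target: str) -> float:
    return 1.0 if output.strip() == target.strip() else 0.0


def run_evaluation(
    dataset: list[dict],
    executor: Callable[[str], str],
    evaluators: dict[str, Callable[[str, str], float]],
) -> dict[str, float]:
    """Run the executor on every datapoint and average each evaluator's score."""
    scores: dict[str, list[float]] = {name: [] for name in evaluators}
    for point in dataset:
        output = executor(point["data"])
        for name, evaluate in evaluators.items():
            scores[name].append(evaluate(output, point["target"]))
    return {name: mean(values) for name, values in scores.items()}


results = run_evaluation(dataset, executor, {"exact_match": exact_match})
print(results)

# Gate on a benchmark (e.g. in CI); 0.6 is an arbitrary example threshold.
THRESHOLD = 0.6
passed = results["exact_match"] >= THRESHOLD
print("passed" if passed else "failed")
```

In a CI job, a failing `passed` would typically translate into a non-zero exit code so the pipeline stops before a regression ships.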

Why This Matters for SREs and DevOps:

- Increased Observability: With OpenLLMetry, Laminar provides end-to-end traceability for LLM pipelines, helping you monitor performance and uptime and troubleshoot issues in real time.

- Actionable Analytics: Understand user behaviors and outcomes with Laminar’s robust semantic event extraction, giving you critical insight into how users interact with your applications.

- Fast Iteration: Prompt chain management enables rapid experimentation and improves operational efficiency, reducing friction in your development lifecycle.

Get Started Today:

Ready to build reliable LLM agents with Laminar AI? Start your journey now:

We invite SREs, DevOps, and Observability experts to share their insights and feedback with us. Let’s work together to make LLM applications more reliable and efficient!